Configure actions-runner-controller with proxy in private EKS cluster

Goals of this post

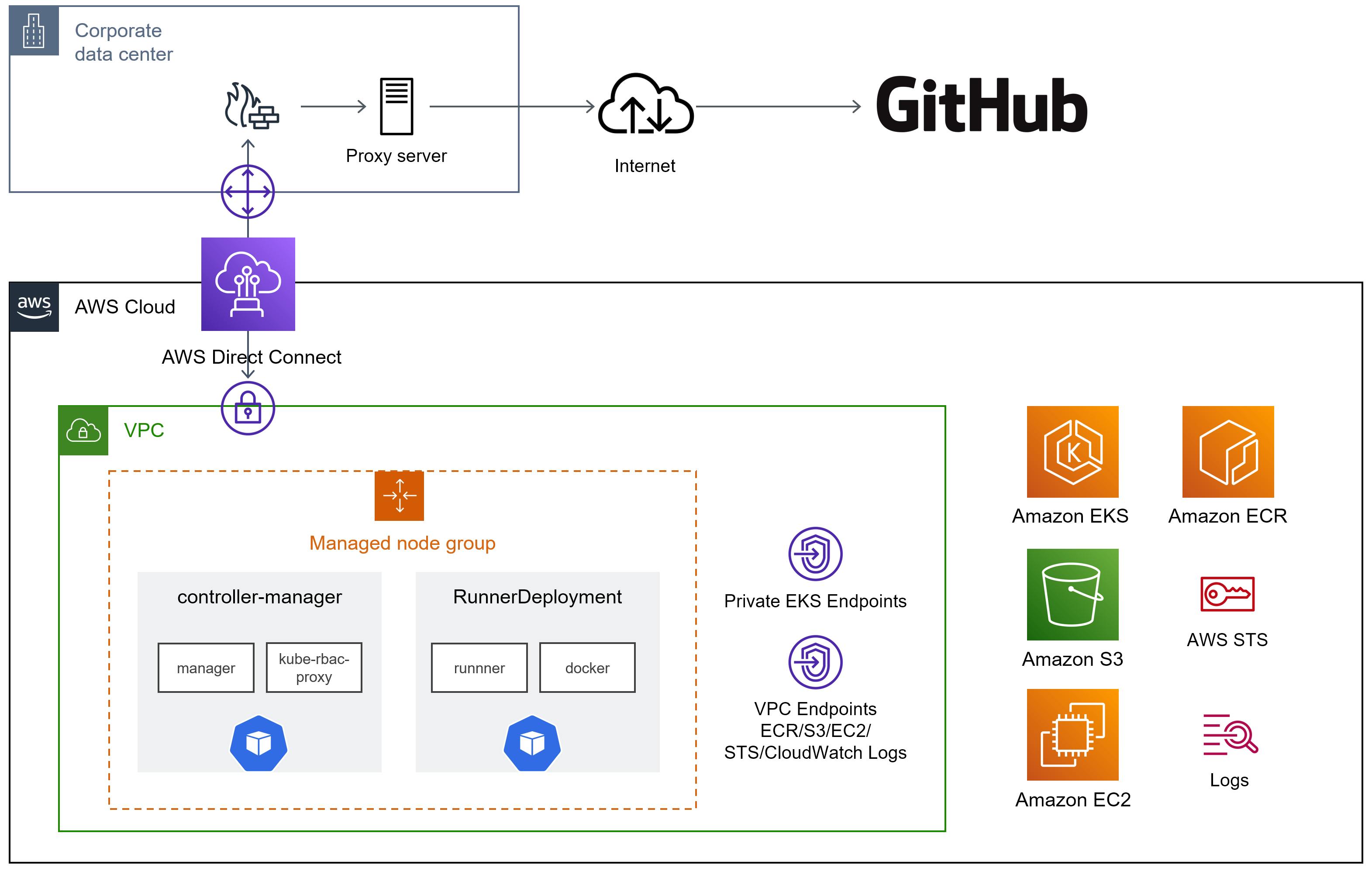

- Run actions-runner-controller on a private EKS cluster

- Communicate with the GitHub API through an on-premises proxy server

The steps in this post were validated with the following configuration.

- Amazon EKS: Kubernetes 1.22

- actions-runner-controller: v0.23.0

- cert-manager: v1.8.0

- eksctl: 0.93.0

- kubectl: v1.21.2

- AWS CLI: 2.5.7

What is actions-runner-controller?

actions-runner-controller is a controller for operating GitHub Actions self-hosted runners on a Kubernetes cluster.

github.com/actions-runner-controller/action..

The rest of this post walks through setting up actions-runner-controller on a private EKS cluster.

Create private EKS Cluster

Set up kubectl, eksctl, and AWS CLI in your working environment in advance. See below for detailed instructions.

kubectl: kubernetes.io/docs/tasks/tools

eksctl: docs.aws.amazon.com/eks/latest/userguide/ek..

AWS CLI: docs.aws.amazon.com/cli/latest/userguide/ge..

Please refer to the following document to create a private cluster with eksctl.

eksctl.io/usage/eks-private-cluster

In this case, I created the EKS cluster using the following config file.

    apiVersion: eksctl.io/v1alpha5
    kind: ClusterConfig

    metadata:
      name: actions-runner
      region: ap-northeast-1
      version: "1.22"

    privateCluster:
      enabled: true
      skipEndpointCreation: true

    vpc:
      subnets:
        private:
          ap-northeast-1a:
            id: subnet-xxxxxxxxxxxxxxxxx
          ap-northeast-1c:
            id: subnet-yyyyyyyyyyyyyyyyy
          ap-northeast-1d:
            id: subnet-zzzzzzzzzzzzzzzzz

For a private EKS cluster, the following VPC endpoints are required.

- ECR (ecr.api, ecr.dkr)

- S3 (Gateway)

- EC2

- STS

- CloudWatch Logs

If you enable a private cluster with eksctl (privateCluster.enabled), these endpoints will be created automatically.

In this case, I had a requirement to use a VPC already connected via Direct Connect, so I set privateCluster.skipEndpointCreation to skip endpoint creation and reuse the existing VPC and its endpoints.
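When endpoint creation is skipped, it helps to confirm that the existing VPC already has every endpoint from the list above. The following is a minimal sketch, not part of the original setup: the function names are hypothetical, and the service names assume the standard `com.amazonaws.<region>.<service>` naming, with `logs` standing for CloudWatch Logs.

```shell
# Print the VPC endpoint service names a private EKS cluster needs in a region.
# The short names mirror the list above; "logs" is CloudWatch Logs.
# (The parentheses run each function in a subshell so variables do not leak.)
required_endpoint_services() (
  region="$1"
  for svc in ecr.api ecr.dkr s3 ec2 sts logs; do
    printf 'com.amazonaws.%s.%s\n' "$region" "$svc"
  done
)

# Print the required services that are absent from an existing list.
# $1: region, $2: newline-separated service names that already have endpoints.
missing_endpoint_services() (
  region="$1"
  existing="$2"
  for svc in $(required_endpoint_services "$region"); do
    printf '%s\n' "$existing" | grep -qxF -- "$svc" || printf '%s\n' "$svc"
  done
)
```

The existing list can be obtained with, for example, `aws ec2 describe-vpc-endpoints --filters Name=vpc-id,Values=<vpc-id> --query 'VpcEndpoints[].ServiceName' --output text | tr '\t' '\n'` and passed as the second argument of `missing_endpoint_services`. An empty result means nothing is missing.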

NOTE: if you specify an existing VPC, eksctl will edit the route table.

However, if the subnet is associated with the main route table, eksctl will not edit the route table and will fail to create the cluster. Therefore, it is necessary to create and associate a route table explicitly.
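Creating and associating a route table explicitly can be done with the AWS CLI. The sketch below assumes placeholder VPC and subnet IDs that you supply, and the function name is hypothetical. A newly created route table contains only the local route, so any routes the subnet relied on in the main route table (for example, toward Direct Connect) would need to be added again.

```shell
# Create a dedicated route table and associate it with one subnet, so the
# subnet no longer falls back to the VPC's main route table.
# $1: VPC ID, $2: subnet ID (both are placeholders you supply).
# Prints the ID of the new route table on success.
associate_explicit_route_table() (
  vpc_id="$1"
  subnet_id="$2"

  rtb_id="$(aws ec2 create-route-table --vpc-id "$vpc_id" \
    --query 'RouteTable.RouteTableId' --output text)" || exit 1

  aws ec2 associate-route-table --route-table-id "$rtb_id" \
    --subnet-id "$subnet_id" >/dev/null || exit 1

  printf '%s\n' "$rtb_id"
)
```

It would be called once per private subnet, for example `associate_explicit_route_table vpc-xxxxxxxx subnet-xxxxxxxxxxxxxxxxx`.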

eksctl reaches the AWS API through the proxy server, but traffic to the private EKS cluster endpoint must bypass the proxy, so set the following environment variables.

    export https_proxy=http://xxx.xxx.xxx.xxx:yyyy
    export no_proxy=.eks.amazonaws.com
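To illustrate why the `.eks.amazonaws.com` entry covers the cluster endpoint, here is a simplified sketch of domain-suffix matching. It is only an approximation under stated assumptions: each tool implements `no_proxy` slightly differently, CIDR entries are not handled, and the function name is hypothetical.

```shell
# Return 0 if a host matches any comma-separated no_proxy entry, either
# exactly or as a subdomain; return 1 otherwise.
# $1: host name, $2: no_proxy value
bypasses_proxy() (
  host="$1"
  set -f      # keep entries such as "*.example.com" from being glob-expanded
  IFS=','
  for entry in $2; do
    entry="${entry#\*}"   # "*.eks.amazonaws.com" -> ".eks.amazonaws.com"
    entry="${entry#.}"    # ".eks.amazonaws.com"  -> "eks.amazonaws.com"
    [ -n "$entry" ] || continue
    case "$host" in
      "$entry" | *".$entry") exit 0 ;;
    esac
  done
  exit 1
)
```

With the value above, a host name ending in `.ap-northeast-1.eks.amazonaws.com` matches and bypasses the proxy, while `api.github.com` does not match and goes through it.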

The following command creates the cluster. Creating a private cluster takes longer than creating a regular cluster. This configuration took about 22 minutes; if eksctl also has to create the VPC or the VPC endpoints, it will take even longer, so adjust the timeout value accordingly.

    $ eksctl create cluster --timeout 30m -f private-cluster.yaml

Register Managed Node Groups

Add UserData to the launch template used by the managed node group and configure the Docker daemon to use a proxy.

This template is based on the following blog.

yomon.hatenablog.com/entry/2020/10/eks_dock..

    AWSTemplateFormatVersion: "2010-09-09"

    Parameters:
      ClusterIp:
        Type: String
      ProxyIP:
        Type: String
      ProxyPort:
        Type: Number
      SshKeyPairName:
        Type: String

    Resources:
      EKSManagedNodeInPrivateNetworkLaunchTemplate:
        Type: AWS::EC2::LaunchTemplate
        Properties:
          LaunchTemplateName: eks-managednodes-in-private-network
          LaunchTemplateData:
            InstanceType: t3.small
            KeyName: !Ref SshKeyPairName
            TagSpecifications:
              - ResourceType: instance
                Tags:
                  - Key: Name
                    Value: actions-runner-nodegroup
              - ResourceType: volume
                Tags:
                  - Key: Name
                    Value: actions-runner-nodegroup
            UserData: !Base64
              "Fn::Sub": |
                Content-Type: multipart/mixed; boundary="==BOUNDARY=="
                MIME-Version: 1.0

                --==BOUNDARY==
                Content-Type: text/cloud-boothook; charset="us-ascii"

                #Set the proxy hostname and port
                PROXY=${ProxyIP}:${ProxyPort}
                MAC=$(curl -s http://169.254.169.254/latest/meta-data/mac/)
                VPC_CIDR=$(curl -s http://169.254.169.254/latest/meta-data/network/interfaces/macs/$MAC/vpc-ipv4-cidr-blocks | xargs | tr ' ' ',')

                #Create the docker systemd directory
                mkdir -p /etc/systemd/system/docker.service.d

                #Configure docker with the proxy
                cloud-init-per instance docker_proxy_config tee <<EOF /etc/systemd/system/docker.service.d/http-proxy.conf >/dev/null
                [Service]
                Environment="HTTP_PROXY=http://$PROXY"
                Environment="HTTPS_PROXY=http://$PROXY"
                Environment="NO_PROXY=${ClusterIp},$VPC_CIDR,localhost,127.0.0.1,169.254.169.254,.internal,s3.amazonaws.com,.s3.ap-northeast-1.amazonaws.com,api.ecr.ap-northeast-1.amazonaws.com,dkr.ecr.ap-northeast-1.amazonaws.com,ec2.ap-northeast-1.amazonaws.com,ap-northeast-1.eks.amazonaws.com"
                EOF

                #Reload the daemon and restart docker to reflect proxy configuration at launch of instance
                cloud-init-per instance reload_daemon systemctl daemon-reload
                cloud-init-per instance enable_docker systemctl enable --now --no-block docker

                --==BOUNDARY==--
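One way to create the launch template from this CloudFormation template and look up its ID for the node group config is sketched below. This is an assumption about the workflow rather than the procedure actually used in this post: the function name, stack name, template file name, and parameter values are all placeholders you supply.

```shell
# Deploy the launch-template stack, then print the launch template ID.
# All six arguments are values you supply; none come from the original post.
deploy_launch_template() (
  stack_name="$1"; template_file="$2"
  cluster_ip="$3"; proxy_ip="$4"; proxy_port="$5"; key_pair="$6"

  # Progress messages go to stderr so stdout carries only the template ID.
  aws cloudformation deploy \
    --stack-name "$stack_name" \
    --template-file "$template_file" \
    --parameter-overrides \
      "ClusterIp=$cluster_ip" "ProxyIP=$proxy_ip" \
      "ProxyPort=$proxy_port" "SshKeyPairName=$key_pair" >&2 || exit 1

  # The LaunchTemplateName is fixed in the template above.
  aws ec2 describe-launch-templates \
    --launch-template-names eks-managednodes-in-private-network \
    --query 'LaunchTemplates[0].LaunchTemplateId' --output text
)
```

The printed `lt-...` value is what goes into `launchTemplate.id` of the node group config.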

After creating the launch template, create a Managed Node Group with the following config file.

    apiVersion: eksctl.io/v1alpha5
    kind: ClusterConfig

    metadata:
      name: actions-runner
      region: ap-northeast-1

    managedNodeGroups:
      - name: t3s-proxy
        desiredCapacity: 1
        privateNetworking: true
        launchTemplate:
          id: lt-xxxxxxxxxxxxxxxxx
          version: "1"

Create the node group with eksctl create nodegroup.

    $ eksctl create nodegroup -f nodegroup.yaml

Installing actions-runner-controller

actions-runner-controller uses cert-manager to manage certificates for Admission Webhooks, so it must be installed in advance.

    $ kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.8.0/cert-manager.yaml
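Because the Admission Webhook certificates depend on cert-manager, it can be useful to wait until cert-manager is ready before going further. A minimal sketch, assuming the default `cert-manager` namespace and the three Deployment names from the static manifest. The function name and the 180-second timeout are arbitrary choices.

```shell
# Wait until every cert-manager Deployment has finished rolling out.
# Returns non-zero as soon as one of them fails or times out.
wait_for_cert_manager() (
  for deploy in cert-manager cert-manager-cainjector cert-manager-webhook; do
    kubectl -n cert-manager rollout status "deployment/$deploy" \
      --timeout=180s || exit 1
  done
)
```

If a rollout times out in a private cluster, the image pull through the Docker proxy configured on the nodes is a reasonable first thing to check.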

To use actions-runner-controller in a proxy environment, the proxy server information must be configured in the following three places.

1. manager container of controller-manager

2. runner container of RunnerDeployment

3. sidecar (dind) container of RunnerDeployment

I deployed actions-runner-controller with kubectl this time.

For 1, set the proxy environment variables (http_proxy, https_proxy, no_proxy) in the Deployment definition of controller-manager.

For 2 and 3, set the same environment variables in the RunnerDeployment definition: env for the runner container and dockerEnv for the dind container.

Edit the manifest file of actions-runner-controller as follows.

      spec:
        containers:
        - args:
          - --metrics-addr=127.0.0.1:8080
          - --enable-leader-election
          command:
          - /manager
          env:
          - name: GITHUB_TOKEN
            valueFrom:
              secretKeyRef:
                key: github_token
                name: controller-manager
                optional: true
          - name: GITHUB_APP_ID
            valueFrom:
              secretKeyRef:
                key: github_app_id
                name: controller-manager
                optional: true
          - name: GITHUB_APP_INSTALLATION_ID
            valueFrom:
              secretKeyRef:
                key: github_app_installation_id
                name: controller-manager
                optional: true
          - name: GITHUB_APP_PRIVATE_KEY
            value: /etc/actions-runner-controller/github_app_private_key
    +     - name: http_proxy
    +       value: "http://xxx.xxx.xxx.xxx:xxxx"
    +     - name: https_proxy
    +       value: "http://xxx.xxx.xxx.xxx:xxxx"
    +     - name: no_proxy
    +       value: "172.20.0.1,*.eks.amazonaws.com"
          image: summerwind/actions-runner-controller:v0.22.3
          name: manager

Create the resources with kubectl create.

    $ kubectl create -f actions-runners-controller.yaml

NOTE: Due to a known issue with Kubernetes, we must use create (or replace when updating) instead of apply.

github.com/actions-runner-controller/action..
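After the manifest is created, you can confirm that the proxy variables actually reached the manager container. This sketch assumes the namespace `actions-runner-system`, the Deployment name `controller-manager`, and the container name `manager` used elsewhere in this post. The function name is hypothetical.

```shell
# Check that the manager container defines all three proxy variables.
# Prints the first missing name to stderr and returns non-zero if one is absent.
controller_has_proxy_env() (
  names="$(kubectl -n actions-runner-system get deployment controller-manager \
    -o jsonpath='{.spec.template.spec.containers[?(@.name=="manager")].env[*].name}')" \
    || exit 1

  for want in http_proxy https_proxy no_proxy; do
    case " $names " in
      *" $want "*) ;;                               # variable is present
      *) echo "missing: $want" >&2; exit 1 ;;
    esac
  done
)
```

Running `controller_has_proxy_env && echo ok` gives a quick pass or fail before moving on to authentication.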

actions-runner-controller can authenticate to the GitHub API with either a GitHub App or a Personal Access Token (PAT).

In this case, we use PAT authentication. Set the appropriate scope for the token depending on the type of runner and create a secret.

    $ kubectl create secret generic controller-manager \
        -n actions-runner-system \
        --from-literal=github_token=${GITHUB_TOKEN}

NOTE: The PAT must be authorized in advance before it can access an Organization that has SAML SSO configured.

docs.github.com/ja/enterprise-cloud@latest/..

The RunnerDeployment and its HorizontalRunnerAutoscaler are defined below.

    apiVersion: actions.summerwind.dev/v1alpha1
    kind: RunnerDeployment
    metadata:
      name: runner-deploy
    spec:
      template:
        spec:
          enterprise: <enterprise-name>
          labels:
            - runner-container
          env:
            - name: RUNNER_FEATURE_FLAG_EPHEMERAL
              value: "true"
            - name: http_proxy
              value: "http://xxx.xxx.xxx.xxx:yyyy"
            - name: https_proxy
              value: "http://xxx.xxx.xxx.xxx:yyyy"
            - name: no_proxy
              value: "172.20.0.1,10.1.2.0/24,*.eks.amazonaws.com"
          dockerEnv:
            - name: http_proxy
              value: "http://xxx.xxx.xxx.xxx:yyyy"
            - name: https_proxy
              value: "http://xxx.xxx.xxx.xxx:yyyy"
            - name: no_proxy
              value: "172.20.0.1,10.1.2.0/24,*.eks.amazonaws.com"
    ---
    apiVersion: actions.summerwind.dev/v1alpha1
    kind: HorizontalRunnerAutoscaler
    metadata:
      name: runner-deployment-autoscaler
    spec:
      scaleDownDelaySecondsAfterScaleOut: 300
      scaleTargetRef:
        name: runner-deploy
      minReplicas: 1
      maxReplicas: 5
      metrics:
        - type: PercentageRunnersBusy
          scaleUpThreshold: '0.75'
          scaleDownThreshold: '0.25'
          scaleUpFactor: '2'
          scaleDownFactor: '0.5'

When a GitHub Actions job uses a container, that container runs on the Docker-in-Docker (dind) container launched as a sidecar of the runner. Specify dockerEnv so that this dind container can pull images through the proxy.

After deployment, confirm that the pod is successfully started and is visible from GitHub.

    $ kubectl apply -f RunnerDeployment.yaml
    $ kubectl get pod
    NAME                            READY   STATUS    RESTARTS   AGE
    pod/runner-deploy-xxxxx-xxxxx   2/2     Running   0          53s
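To confirm that both env and dockerEnv took effect, you can print the proxy variables inside each container of a runner pod. This is a sketch under one assumption: the two containers are named `runner` and `docker`, which you should check with `kubectl describe pod` if it does not match your version. The function name is hypothetical.

```shell
# Print the proxy-related environment variables of each container in a runner pod.
# $1: pod name as shown by `kubectl get pod`
# Assumption: the containers are named "runner" and "docker".
show_runner_proxy_env() (
  pod="$1"
  for container in runner docker; do
    echo "== $container =="
    kubectl exec "$pod" -c "$container" -- env \
      | grep -i '_proxy=' || echo "(no proxy variables set)"
  done
)
```

For example, `show_runner_proxy_env runner-deploy-xxxxx-xxxxx` should list http_proxy, https_proxy, and no_proxy under both headings.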

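If controller-manager does not start properly, check the proxy settings of the controller-manager Deployment (item 1) or the settings on the GitHub side, such as the token's scope. If a runner does not start properly, check the env and dockerEnv settings of the RunnerDeployment (items 2 and 3).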